Process Synchronization

What is Process Synchronization?

Process synchronization is the way in which processes that share the same memory space are coordinated in an operating system. It maintains the consistency of shared data by using synchronization variables or hardware support so that only one process can change the shared memory at a time.

Example:

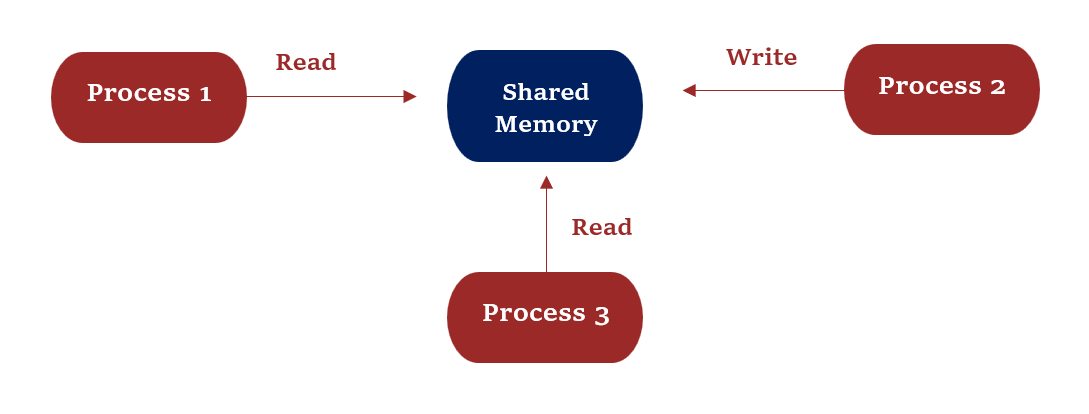

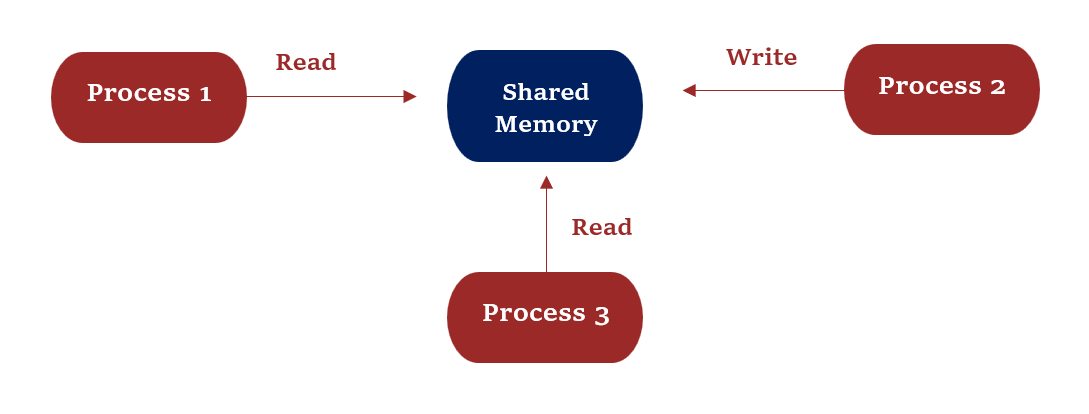

Let us look at why exactly we need process synchronization. For example, if process 1 is reading the data at a memory location while process 2 is changing the data at the same location, there is a high chance that the data read by process 1 will be incorrect.

Data inconsistency can occur when several processes share a common resource in a system, which is why the operating system needs process synchronization.

On the basis of synchronization, processes are categorized as one of the following two types:

1] Independent Process: The execution of one process does not affect the execution of other processes.

2] Cooperative Process: A process that can affect or be affected by other processes executing in the system.

Concepts in Process Synchronization:

• Race Condition :

A race condition occurs when more than one process executes the same code or accesses the same memory or shared variable at the same time, so that the output or the final value of the shared variable depends on the order in which those accesses happen. The processes are effectively racing each other: whichever one writes last decides the result, and that result may be wrong.
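As a minimal sketch of how such a wrong value can arise, the following replays one unlucky interleaving by hand instead of relying on the scheduler, so the outcome is repeatable. The `shared` dictionary, the starting balance of 100, and the helper names `read_balance` and `write_balance` are assumptions made up for this illustration.

```python
# Deterministic replay of a lost update: the interleaving is written out
# step by step, so the result does not depend on thread scheduling.
shared = {"balance": 100}   # hypothetical shared variable

def read_balance():
    return shared["balance"]

def write_balance(value):
    shared["balance"] = value

# Racy order: both processes read before either one writes.
p1_local = read_balance()       # P1 sees 100
p2_local = read_balance()       # P2 also sees 100
write_balance(p1_local + 10)    # P1 adds 10 and stores 110
write_balance(p2_local - 20)    # P2 subtracts 20 and stores 80, overwriting P1
racy_result = shared["balance"]

# Serial order: each process finishes its read and write before the next starts.
shared["balance"] = 100
write_balance(read_balance() + 10)
write_balance(read_balance() - 20)
serial_result = shared["balance"]

print(racy_result, serial_result)   # 80 90
```

The update made by P1 is lost in the racy order because P2 computed its new value from a stale read.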

• Critical Section Problem :

A critical section is a code segment that can be executed by only one process at a time. It contains the shared variables that need to be synchronized to keep the data consistent.

The critical section problem therefore means designing a way for cooperative processes to access shared resources without creating data inconsistencies.
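The usual shape of a process that uses a critical section is entry section, critical section, exit section, and then a remainder section that does not touch shared data. The sketch below illustrates that shape with Python threads standing in for processes and `threading.Lock` standing in for the entry and exit protocol; the names `counter` and `worker` and the iteration counts are assumptions chosen for the example.

```python
import threading

counter = 0                  # shared variable, touched only in the critical section
lock = threading.Lock()      # stands in for the entry/exit protocol

def worker(iterations):
    global counter
    for _ in range(iterations):
        lock.acquire()       # entry section: wait until no one else is inside
        try:
            counter += 1     # critical section: read-modify-write on shared data
        finally:
            lock.release()   # exit section: allow the next thread to enter
        # remainder section: work that does not use shared data would go here

threads = [threading.Thread(target=worker, args=(10_000,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(counter)   # 40000
```

Because every increment happens while the lock is held, no update is lost and the final count equals the number of threads times the number of iterations.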

Any solution to the critical section problem must satisfy three requirements:

• Mutual Exclusion :

If a process is executing in its critical section, then no other process is allowed to execute in the critical section.

• Progress :

If no process is executing in the critical section and other processes are waiting outside the critical section, then only those processes that are not executing in their remainder section (the code that follows the critical section and does not use shared data) can participate in deciding which will enter the critical section next, and the selection cannot be postponed indefinitely.

• Bounded Waiting :

A bound must exist on the number of times that other processes are allowed to enter their critical sections after a process has made a request to enter its critical section and before that request is granted.

• Peterson’s Solution :

Peterson’s Solution is a classical software-based solution to the critical section problem for two processes.

In Peterson’s solution, we have two shared variables:

1] boolean flag[i]: Initialized to FALSE for each process i, because initially no process is interested in entering the critical section.

2] int turn: Indicates the process whose turn it is to enter the critical section.
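The way the two variables work together can be sketched as follows. This is an illustration under stated assumptions, not a production lock: it uses two Python threads in place of processes, and it relies on the standard CPython build with the global interpreter lock, which makes the reads and writes below appear in program order. On real hardware or in other languages, Peterson's algorithm needs atomic operations or memory barriers to stay correct. The names `enter`, `leave`, `worker`, and `counter` are hypothetical.

```python
import threading
import time

flag = [False, False]   # flag[i] is True while process i wants to enter
turn = 0                # the process that gets priority when both want to enter
counter = 0             # shared data protected by the algorithm

def enter(i):
    global turn
    other = 1 - i
    flag[i] = True      # announce interest in the critical section
    turn = other        # offer the turn to the other process
    # Wait only while the other process is interested and it is its turn.
    while flag[other] and turn == other:
        time.sleep(0)   # busy-wait; sleep(0) just yields the interpreter

def leave(i):
    flag[i] = False     # no longer interested

def worker(i, iterations):
    global counter
    for _ in range(iterations):
        enter(i)
        counter += 1    # critical section
        leave(i)

threads = [threading.Thread(target=worker, args=(i, 1000)) for i in range(2)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(counter)
```

Under the assumptions above, each of the 2000 increments runs with the other thread excluded, so none is lost. Setting `turn` to the other process before waiting is what prevents both threads from waiting forever when they request entry at the same moment.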

• Semaphores :

A semaphore is a signaling mechanism: a thread that is waiting on a semaphore can be signaled by another thread. This differs from a mutex, which can be released only by the thread that acquired it. A semaphore is an integer variable that can be accessed only through two atomic operations, wait() and signal(). There are two types of semaphores: binary semaphores, whose value is restricted to 0 and 1, and counting semaphores, whose value is not restricted to 0 and 1.
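To make the wait() and signal() operations concrete, here is a minimal sketch of a counting semaphore built on Python's `threading.Condition`. The class name `CountingSemaphore`, the limit of two slots, and the bookkeeping variables `inside` and `peak` are assumptions invented for this example; Python's standard library also provides a ready-made `threading.Semaphore` with `acquire()` and `release()`.

```python
import threading
import time

class CountingSemaphore:
    """Integer counter that is changed only through wait() and signal()."""

    def __init__(self, value):
        self._value = value
        self._cond = threading.Condition()

    def wait(self):
        # Block until the counter is positive, then decrement it.
        with self._cond:
            while self._value == 0:
                self._cond.wait()
            self._value -= 1

    def signal(self):
        # Increment the counter and wake one waiting thread, if any.
        with self._cond:
            self._value += 1
            self._cond.notify()

slots = CountingSemaphore(2)    # at most two threads may use the resource
state_lock = threading.Lock()   # protects the bookkeeping below
inside = 0                      # threads currently using the resource
peak = 0                        # highest value of `inside` observed

def worker():
    global inside, peak
    slots.wait()
    with state_lock:
        inside += 1
        peak = max(peak, inside)
    time.sleep(0.01)            # pretend to use the resource
    with state_lock:
        inside -= 1
    slots.signal()

threads = [threading.Thread(target=worker) for _ in range(6)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(peak <= 2)   # True
```

Creating the semaphore with an initial value of 1 gives a binary semaphore. Unlike a mutex, nothing here requires that the thread calling `signal()` is the one that called `wait()`, which is what allows a semaphore to be used for signaling between threads.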
